Will AI Shape Our Communities?

By Vasavi Sai Nunna

Every scroll, like, and click you make online teaches an algorithm what kind of content to show you next. Over time, your feed begins to feel familiar—almost intuitive. It reflects your interests, your humor, and your values.

But what if the information you’re seeing isn’t the full picture? And what if the bigger issue isn’t just what you see—but that your neighbors, classmates, and community members are all seeing entirely different versions of reality?

This is the quiet power of AI-driven personalization. While algorithms shape individual feeds, they also shape how communities form opinions, understand one another, and engage in civic life. In a media-saturated world, filter bubbles don’t just influence beliefs—they influence relationships.

Most major platforms, including TikTok, YouTube, Instagram, and Facebook, rely on machine learning algorithms to decide what content to show each user.

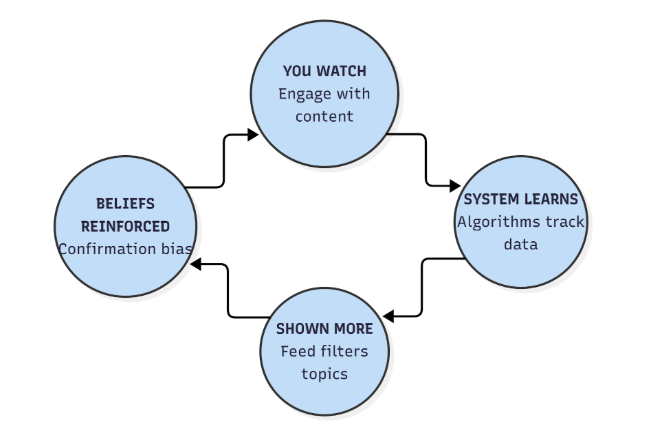

- First, these systems track your behavior: what you watch, how long you stay, and what you like or share.

- Then, they look for patterns. If you tend to engage with emotional videos, political commentary, or wellness content, the algorithm learns that preference.

- Finally, they recommend more of the content that has kept you engaged before.
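As a rough illustration, the three steps above can be sketched in a few lines of Python. This is a toy model, not any platform’s actual system: the event fields (`topic`, `seconds_watched`, `liked`), the 30-second bonus for a like, and the function names are all assumptions invented for this example.

```python
from collections import defaultdict

LIKE_BONUS_SECONDS = 30.0  # assumed value: a like counts as 30 extra seconds


def learn_topic_weights(events):
    """Steps 1 and 2: tally tracked engagement per topic and normalize it."""
    totals = defaultdict(float)
    for event in events:
        score = event["seconds_watched"]
        if event["liked"]:
            score += LIKE_BONUS_SECONDS
        totals[event["topic"]] += score
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {topic: score / grand_total for topic, score in totals.items()}


def recommend(candidates, weights, k=3):
    """Step 3: rank candidate items by how much the user engaged with their topic."""
    ranked = sorted(
        candidates,
        key=lambda item: weights.get(item["topic"], 0.0),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical viewing history for one user.
events = [
    {"topic": "wellness", "seconds_watched": 120, "liked": True},
    {"topic": "politics", "seconds_watched": 10, "liked": False},
    {"topic": "wellness", "seconds_watched": 90, "liked": False},
]

# Hypothetical pool of videos the platform could show next.
candidates = [
    {"id": "a", "topic": "politics"},
    {"id": "b", "topic": "wellness"},
    {"id": "c", "topic": "cooking"},
    {"id": "d", "topic": "wellness"},
]

weights = learn_topic_weights(events)
top_ids = [item["id"] for item in recommend(candidates, weights, k=2)]
print(top_ids)  # ['b', 'd']
```

Even in this simplified version, the cooking video never surfaces: a topic the user has not already engaged with has no weight, so it is ranked last.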

This process isn’t inherently harmful. Personalization can be useful, helping people discover content they genuinely enjoy. But when personalization becomes the dominant way we encounter information, it changes how communities function. Instead of sharing common news stories or cultural reference points, we are sorted into micro-audiences, each living inside a personalized information environment.

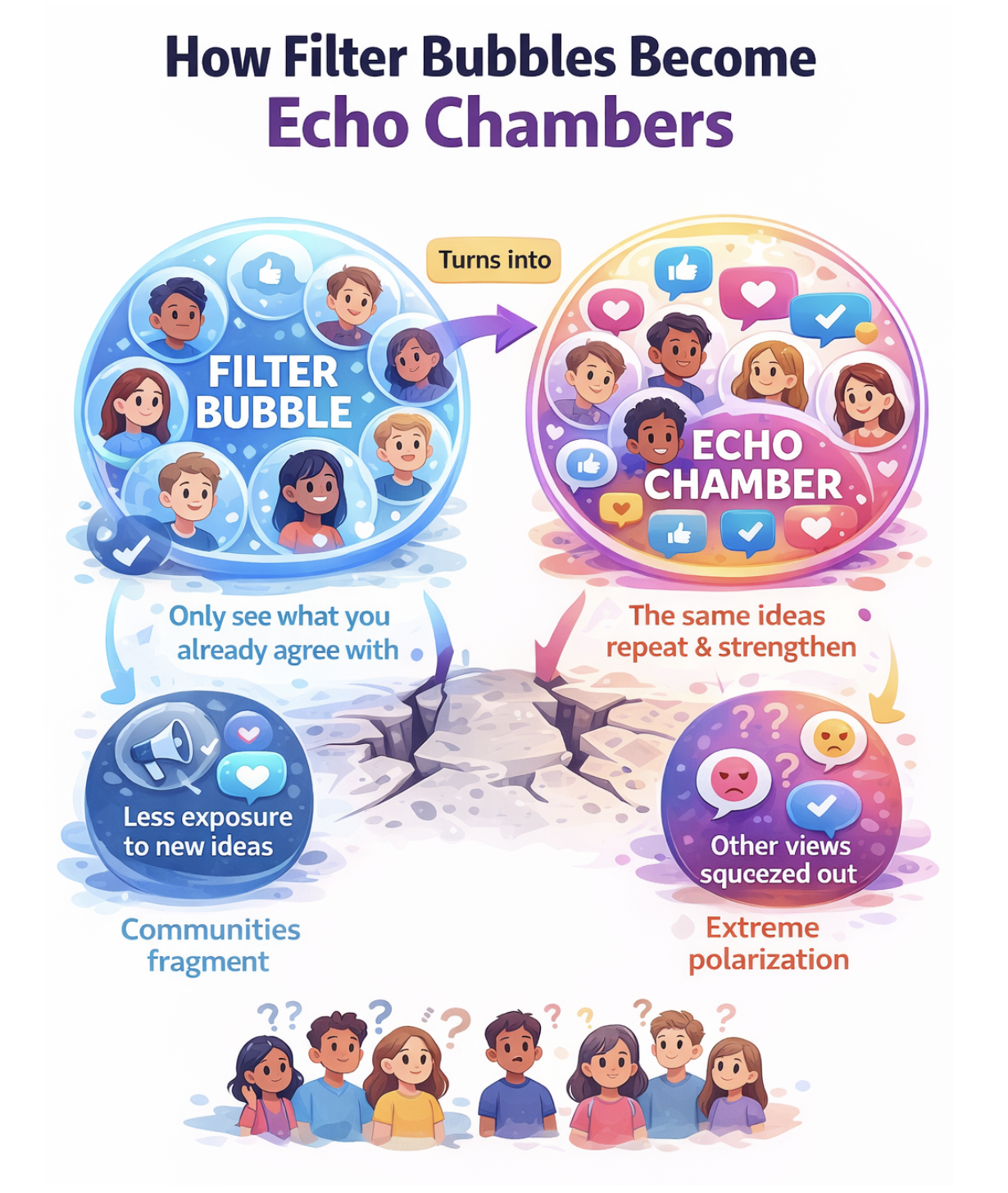

That’s where filter bubbles emerge. The term, coined by author Eli Pariser, describes the personalized universe of information each of us inhabits online, one that largely reflects our existing beliefs and interests. Inside these bubbles, we mostly see content that confirms what we already think and rarely encounter perspectives that challenge us.


Over time, filter bubbles can turn into echo chambers: social spaces where the same ideas circulate repeatedly and dissenting voices are dismissed or ignored. When this happens at scale, communities begin to fragment. People aren’t just disagreeing more—they’re struggling to understand how others could see the world so differently.

This matters because healthy communities depend on shared understanding. When people no longer encounter the same information, it becomes harder to build trust, practice empathy, or engage in meaningful dialogue. Disagreement turns into suspicion, and curiosity gives way to certainty.

At the heart of this problem is the attention economy. AI systems don’t have values or beliefs; they optimize for engagement. They learn which emotions keep users scrolling and amplify content that triggers strong reactions, whether that’s outrage, fear, or validation. As a result, sensational or extreme content often travels faster than balanced or contextual information. These systems create feedback loops: what you engage with determines what you are shown, and what you are shown shapes what you engage with next.

Over time, beliefs can harden—not because someone set out to manipulate you, but because the system is doing exactly what it was designed to do. You’re not just consuming content; you’re training the system that shapes your information world.
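The feedback loop can be made concrete with a small simulation. The sketch below is a deliberately simplified model under stated assumptions, not a description of how any real platform works: the topic names, the `appeal` numbers (how strongly each kind of content holds attention), and the function name are hypothetical. Each round, the feed shows topics in proportion to past engagement, and engagement is assumed to equal exposure multiplied by appeal.

```python
def simulate_feedback_loop(appeal, rounds=10):
    """Return the feed's topic shares after each round of the loop.

    appeal maps each topic to an assumed engagement rate per unit of exposure.
    """
    topics = list(appeal)
    # Start with a balanced feed: every topic gets an equal share.
    share = {topic: 1.0 / len(topics) for topic in topics}
    history = [dict(share)]
    for _ in range(rounds):
        # Engagement depends on what was shown and how gripping it is.
        engagement = {topic: share[topic] * appeal[topic] for topic in topics}
        total = sum(engagement.values())
        # The next feed mirrors the engagement it just measured.
        share = {topic: engagement[topic] / total for topic in topics}
        history.append(dict(share))
    return history


# Assumed appeal values: outrage holds attention only slightly better.
history = simulate_feedback_loop(
    {"outrage": 1.3, "balanced": 1.0, "context": 1.0}, rounds=10
)
for round_number, shares in enumerate(history):
    print(round_number, round(shares["outrage"], 3))
```

In this toy model, a modest edge in appeal compounds: outrage starts at one third of the feed and takes up more than three quarters of it after ten rounds, even though no one set out to promote it.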

Consider a real-life example: Ava, a 16-year-old student who becomes interested in healthy eating. After watching and liking a few videos about “clean eating,” her social media feed quickly fills with calorie-counting tips, warnings about “toxic” ingredients, and extreme diet challenges. Balanced advice from registered dietitians or body-positive creators slowly disappears.

At first, Ava feels informed. But soon, her filter bubble pulls her away from shared experiences and community life: she becomes anxious about food, avoids social events involving meals, and feels disconnected from friends who don’t share her concerns.

As individuals, we can take steps to improve our own relationships with algorithmic personalization:

- Be Aware of Your Engagement. Before liking or commenting, ask: Do I want more of this in my feed?

- Practice Cross-Community Listening. Follow creators and perspectives outside your comfort zone—not to argue, but to understand.

- Retrain Your Feed. Use “Not Interested,” clear watch histories, or intentionally search for diverse content.

- Talk Outside Your Bubble. Conversations with people who think differently—online or offline—help rebuild shared understanding.

- Build Offline Curiosity. Read, watch, or attend something your algorithm would never recommend. Libraries, community events, and classrooms remain powerful spaces for shared learning.

Media literacy must also be a community practice. Educators, librarians, youth leaders, and community organizations can create spaces where people reflect on what they’re seeing online, compare different feeds or search results, and ask deeper questions: Why am I seeing this? What’s missing? Who benefits from this narrative?

These conversations matter. Media literacy isn’t just about spotting misinformation or avoiding manipulation—it’s about restoring the conditions for dialogue, empathy, and participation in civic life.

You don’t need to delete your apps or reject technology altogether. But awareness is essential. When communities learn to engage with media more intentionally—pausing before sharing, questioning what’s absent, and staying open to difference—we don’t just step outside our filter bubbles. We strengthen our ability to connect, collaborate, and coexist in a complex information environment.

In a fragmented media landscape, awareness isn’t just power. It’s how communities stay connected.

MediaEd Insights - January 2026 - Building a Community

